The Hadoop Distributed File System (HDFS) is a distributed, redundant file system that stores your data in chunks across multiple nodes. This allows for fault-tolerant data storage (a node can die without the loss of data) as well as parallel data processing. If you want to store and analyze large amounts of data, Hadoop is a great option.

I recently read a great book called Data Analytics with Hadoop, and this post is based on what I learned there. In this tutorial, I walk you through setting up Hadoop locally for experimentation. I also show you how to create a simple job that processes data in Hadoop.

Create the Virtual Machine

We’re going to start by creating a virtual machine. Download and install VirtualBox, and download the latest LTS Ubuntu Server ISO. Once VirtualBox is installed and your ISO is downloaded, go to VirtualBox and create a new virtual machine with the following parameters:

- Type: Linux (Ubuntu 64 bit)

- Memory: I recommend 2 GB

- Disk: 10GB should be enough, but I’d recommend 50GB

- Networking: Make sure your networking is set to Bridged if you want to SSH into the machine

When you start up your VM for the first time, VirtualBox will ask you to select installation media to install an OS. Use the Ubuntu server ISO you downloaded and install Ubuntu with all the default settings.

I won’t cover how to do this in detail, but I recommend setting up SSH (sudo apt-get install ssh) so you can remotely log into the virtual machine. This will allow you to work from your computer’s shell, copy-paste from your browser and switch between windows easily. You can add your machine’s public key to the VM’s authorized keys file (~/.ssh/authorized_keys) so that you don’t have to type a password every time you log in.

Disable IPv6

I’m not sure if this is still true, but the book states that Hadoop doesn’t play well with IPv6. To disable it, edit the config by typing (sudo nano /etc/sysctl.conf) and at the end of the file add the settings listed here:

The settings don’t take effect until you reboot (sudo shutdown -r now). If you did this correctly, typing (cat /proc/sys/net/ipv6/conf/all/disable_ipv6) should print out the number 1 on your screen.

Installing Hadoop

Now comes the fun part: getting Hadoop all set up! Start by logging in with your username, then switch to root (sudo su) and follow the commands and instructions here:

Setting up Hadoop

For this section, you’re going to want to log in as the hadoop user with (sudo su hadoop) and add the lines listed in this gist to both of these files:

- /home/hadoop/.bashrc

- /srv/hadoop/etc/hadoop/hadoop-env.sh

You’ll then want to create a script to start up Hadoop by typing (nano ~/hadoop_start.sh) and adding the content from this gist to it. In the directory /srv/hadoop/etc/hadoop, create or update the following files with the corresponding contents:

- core-site.xml: https://gist.github.com/nabsul/27a9ee7d17f5f1e262999b30b5b4fec3

- mapred-site.xml: https://gist.github.com/nabsul/306d9d7cc976f9e362839594fa511421

- hdfs-site.xml: https://gist.github.com/nabsul/49b796c5a05582428fe7d479c0efb328

- yarn-site.xml: https://gist.github.com/nabsul/1462ad2d4172770de69e483b6b0bda32

Finally, we set up an authorized key and format the name node by executing the following code:

Now let’s start up Hadoop! If you type (jps) now, it should only list Jps as a running process. To start up the Hadoop processes, just type (~/hadoop_start.sh). The first time you run this command it’ll ask you if you trust these servers, to which you should answer “yes”. Now if you type (jps) you should see several processes running, such as SecondaryNameNode, NameNode, NodeManager, DataNode, and ResourceManager. From now on, you’ll only need to type (~/hadoop_start.sh) to start up Hadoop on your virtual machine, and you’ll only need to do this if you restart your machine.
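If you would rather check the process list from a script than by eye, the comparison can be sketched in Python. This is a minimal sketch and not part of the original setup: the function name `missing_daemons` is made up for illustration, the example output is hypothetical, and it assumes `jps` prints one `<pid> <process name>` pair per line.

```python
# Daemons this tutorial expects to see after running ~/hadoop_start.sh.
EXPECTED_DAEMONS = {
    "NameNode",
    "SecondaryNameNode",
    "DataNode",
    "ResourceManager",
    "NodeManager",
}


def missing_daemons(jps_output):
    """Return the expected daemon names that do not appear in jps output.

    Assumes each line of output looks like "<pid> <process name>".
    """
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            running.add(parts[1])
    return sorted(EXPECTED_DAEMONS - running)


# Hypothetical jps output, pasted in as a string for illustration.
example = """\
2101 NameNode
2250 DataNode
2466 SecondaryNameNode
2903 Jps
"""
print(missing_daemons(example))  # ['NodeManager', 'ResourceManager']
```

On the VM you could feed it real output with something like `subprocess.run(["jps"], capture_output=True, text=True).stdout`. An empty list would mean all five daemons are up.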

Create and Run Map-Reduce Scripts

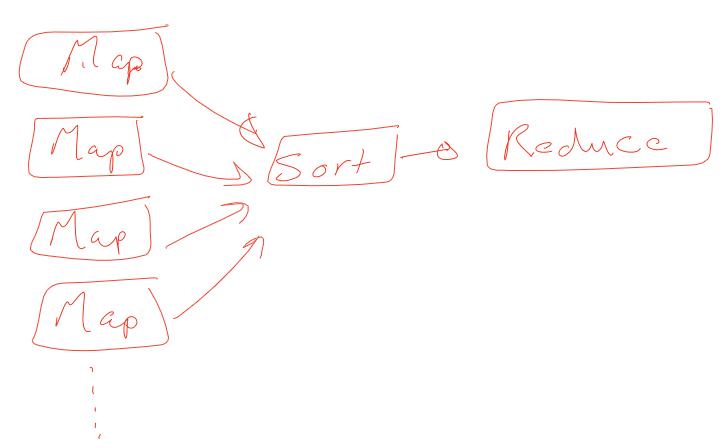

A Map-Reduce job consists of two stages: mapping and reducing. In the mapping stage, you go over the raw input data and extract the information you’re looking for. The reduce stage is where the results of mapping are brought together for aggregation. It’s important to remember that these processes are distributed. In a real Hadoop cluster, mapping happens on different machines in parallel and you need to keep this in mind when writing your code. For the purpose of this tutorial we can visualize the process as follows:

Different nodes in the cluster process different chunks of data locally by running them through the mapper. Then, all the outputs from the different mappers are combined and sorted to be processed by the reducer. More complex arrangements exist, with multiple intermediate reducers for example, but that is beyond the scope of this tutorial.
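The flow described above can be imitated in a few lines of Python, with plain lists standing in for the chunks held by different nodes. This is only a sketch to make the stages concrete, not how Hadoop itself is implemented. The names `mapper`, `reducer` and `run_job` are invented here, and the log format (status code as the last field on each line) is an assumption for illustration.

```python
from itertools import groupby
from operator import itemgetter


def mapper(lines):
    """Emit a (status, 1) pair per log line.

    Assumes the status is the last whitespace-separated field.
    """
    for line in lines:
        fields = line.split()
        if fields:
            yield fields[-1], 1


def reducer(sorted_pairs):
    """Sum the counts for each key; pairs must arrive sorted by key."""
    for key, group in groupby(sorted_pairs, key=itemgetter(0)):
        yield key, sum(count for _, count in group)


def run_job(chunks):
    """Map each chunk separately, then combine, sort and reduce."""
    mapped = []
    for chunk in chunks:  # each chunk stands in for the data on one node
        mapped.extend(mapper(chunk))
    mapped.sort(key=itemgetter(0))  # the combine-and-sort step
    return dict(reducer(mapped))


# Two made-up chunks, as if the log file were split across two nodes.
chunks = [
    ["GET /a 200", "GET /b 404"],
    ["GET /c 200", "GET /d 500", "GET /e 200"],
]
print(run_job(chunks))  # {'200': 3, '404': 1, '500': 1}
```

Note that the mapper never needs to see more than one line at a time, which is what lets each node work on its own chunk without talking to the others.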

Getting the Scripts

Now that we have Hadoop up and running on our sandbox, let’s analyze some logs! You’ll want to be logged in as the hadoop user (sudo su hadoop). Go to the home directory (cd ~) and check out the sample code by running (git clone https://github.com/nabsul/hadoop-tutorial.git). Then change to the directory of this tutorial by typing (cd hadoop-tutorial/part-1).

In this folder you’ll find sample logs to work with and four pairs of _mapper.py and _reducer.py scripts, which do the following:

- count_status: Count occurrences of the status field in all the logs

- status per day: Same as the above, but provides the stats per day

- logs_1day: Fetches all the logs of a specific day

- sample: Extract a 1% random sample of the logs
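To give a feel for what one of these jobs involves, here is a guess at how a 1% sampling step could work. It is a sketch only and not the code from the repository: `sample_lines`, its `rate` parameter and the optional `rng` argument are names invented here, and the log lines are made up.

```python
import random


def sample_lines(lines, rate=0.01, rng=None):
    """Yield each input line with probability `rate` (default 1%)."""
    if rng is None:
        rng = random.Random()
    for line in lines:
        # random() returns a float in [0.0, 1.0), so on average about
        # `rate` of the lines pass this test.
        if rng.random() < rate:
            yield line


# Made-up log lines; a seeded generator keeps the run repeatable.
logs = [f"request {i}" for i in range(10_000)]
kept = list(sample_lines(logs, rate=0.01, rng=random.Random(42)))
print(len(kept), "of", len(logs), "lines kept")
```

Because every line is decided independently, this kind of mapper can run on any chunk on any node. The sample size is only approximately 1%, since it depends on the random draws.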

Running the Scripts Locally

The scripts provided can either be run locally or in the Hadoop cluster. To run them locally, execute the following from inside the part-1 folder:

    cat sample_logs.txt | ./count_status_mapper.py | sort | ./count_status_reducer.py

To run any of the other jobs, just substitute the mapper/reducer scripts as needed.
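The shell pipeline works because each stage only reads lines of text and writes lines of text, with `sort` in the middle bringing identical keys next to each other. The sketch below mimics that pipeline inside a single Python process. It is an illustration under assumptions, not the repository's scripts: the function names are invented, the log format (status as the last field) is hypothetical, and the tab-separated `key<TAB>value` lines follow the default convention of Hadoop Streaming.

```python
import io
from itertools import groupby


def map_stage(lines, out):
    """Write "status<TAB>1" for every log line.

    Assumes a hypothetical log format where the status is the last
    whitespace-separated field on the line.
    """
    for line in lines:
        fields = line.split()
        if fields:
            out.write(f"{fields[-1]}\t1\n")


def reduce_stage(lines, out):
    """Sum the counts for each key; input must already be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines)
    for status, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(count) for _, count in group)
        out.write(f"{status}\t{total}\n")


def run_pipeline(log_text):
    """Mimic `cat file | mapper | sort | reducer` without the shell."""
    mapped = io.StringIO()
    map_stage(io.StringIO(log_text), mapped)
    # Stand-in for the `sort` command: bring identical keys together.
    shuffled = sorted(mapped.getvalue().splitlines(keepends=True))
    reduced = io.StringIO()
    reduce_stage(shuffled, reduced)
    return reduced.getvalue()


print(run_pipeline("GET /a 200\nGET /b 404\nGET /c 200\n"), end="")
```

In a real streaming script the two stages would read from `sys.stdin` and write to `sys.stdout` instead of `StringIO` objects. The reducer relies on sorted input: without the sort step, `groupby` would start a new group every time the key changes and report the same status more than once.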

Uploading the Logs to Hadoop

Before running a job in Hadoop, we’ll need some data to work with. Let’s upload our sample logs with the following commands:

    hadoop fs -mkdir -p /home/hadoop
    hadoop fs -copyFromLocal sample_logs.txt /home/hadoop/sample_logs.txt

Running a Job in Hadoop

Finally, type the following to run a job in Hadoop:

    hadoop jar $HADOOP_JAR_LIB/hadoop-streaming* \
      -mapper /home/hadoop/hadoop-tutorial/part-1/sample_mapper.py \
      -reducer /home/hadoop/hadoop-tutorial/part-1/sample_reducer.py \
      -input /home/hadoop/sample_logs.txt -output /home/hadoop/job_1_samples

If that runs successfully, you’ll be able to view the job results by typing (hadoop fs -ls /home/hadoop/job_1_samples) and (hadoop fs -cat /home/hadoop/job_1_samples/part-00000).

Another interesting thing to look at is the Hadoop dashboard, which can be found at http://[VM’s IP Address]:8088. This will provide you with some information on the jobs that have been running on your virtual cluster.
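The same port also serves the YARN ResourceManager REST API, so the job list can be read from a script as well as from the browser. The sketch below assumes the `/ws/v1/cluster/apps` endpoint and the `apps` / `app` JSON layout of that API, and it has not been tested against the VM built in this tutorial. The helper names (`apps_url`, `summarize_apps`, `fetch_apps`) and the sample payload are made up for illustration.

```python
import json
from urllib.request import Request, urlopen


def apps_url(host, port=8088):
    """Build the URL of the ResourceManager's application list."""
    return f"http://{host}:{port}/ws/v1/cluster/apps"


def summarize_apps(payload):
    """Turn the decoded JSON body into (id, name, state, finalStatus) tuples.

    Assumes the layout {"apps": {"app": [...]}}; "apps" may be null
    when no jobs have run yet.
    """
    apps = (payload.get("apps") or {}).get("app", [])
    return [
        (a.get("id"), a.get("name"), a.get("state"), a.get("finalStatus"))
        for a in apps
    ]


def fetch_apps(host, port=8088):
    """Query the ResourceManager and summarize the jobs it knows about."""
    request = Request(apps_url(host, port), headers={"Accept": "application/json"})
    with urlopen(request, timeout=10) as response:
        return summarize_apps(json.load(response))


# Hypothetical response body, so the parsing can be tried without a cluster.
sample_payload = {
    "apps": {
        "app": [
            {
                "id": "application_0000000000000_0001",
                "name": "example-job",
                "state": "FINISHED",
                "finalStatus": "SUCCEEDED",
            }
        ]
    }
}
print(summarize_apps(sample_payload))
```

With the VM running, `fetch_apps("<VM's IP address>")` would be the call to try, substituting the address you use for the dashboard.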

Conclusion

At this point, you might be thinking: “I just ran a couple of Python scripts locally, and then submitted them to Hadoop to get the same answer. What’s the big deal?” I’m glad you asked (and noticed)! It is true that Hadoop gives you nothing interesting when you’re only working on a few megabytes of data. But imagine that you had a few terabytes of data instead. At that scale:

- It would be very hard to store that information on one machine

- It would take a very long time to run your Python script on one giant file

- You might run out of memory before getting through all the data

- If that one machine crashes, you could lose all or part of your data

That’s where the Hadoop environment is useful. Your terabytes of data are spread across several nodes, and each node works on a chunk of data locally. Then, each node provides its partial results to produce the final result. Moreover, the beauty of using Python the way we just did is that you can first test your script on a small local sample to make sure it works. After you debug it and make sure it works as expected, you can then submit the same code to your Hadoop cluster to work on larger volumes of data.

I hope you enjoyed this tutorial. In part 2 I plan to tackle the topic of: “How do I get my data into Hadoop?”. Specifically, we’ll look into setting up Kafka to receive log messages and store them in HDFS.